Whisper

Whisper: Audio Transcription and Subtitle Extraction

Overview

Let's try to use FFmpeg in combination with OpenAI's Whisper to extract transcriptions and generate subtitles from audio and video files. FFmpeg handles media processing while Whisper provides state-of-the-art speech recognition.

Prerequisites

Required Software

- FFmpeg: Media processing framework

- Whisper: OpenAI's automatic speech recognition system

- Python 3.7+: Required for Whisper

Installation

Install FFmpeg

1# Windows (using chocolatey)

2choco install ffmpeg

3

4# macOS (using homebrew)

5brew install ffmpeg

6

7# Ubuntu/Debian

8sudo apt update

9sudo apt install ffmpeg

10

11# Verify installation

12ffmpeg -version

Install Whisper

1# Install via pip

2pip install openai-whisper

3

4# Or install the latest development version

5pip install git+https://github.com/openai/whisper.git

6

7# Verify installation

8whisper --help

Basic Workflow

Method 1: Direct Audio/Video to Text

For files that Whisper can process directly:

1# Basic transcription

2whisper audio_file.mp3

3

4# Specify output format

5whisper video_file.mp4 --output_format txt

6

7# Choose model size (tiny, base, small, medium, large)

8whisper audio_file.wav --model medium

9

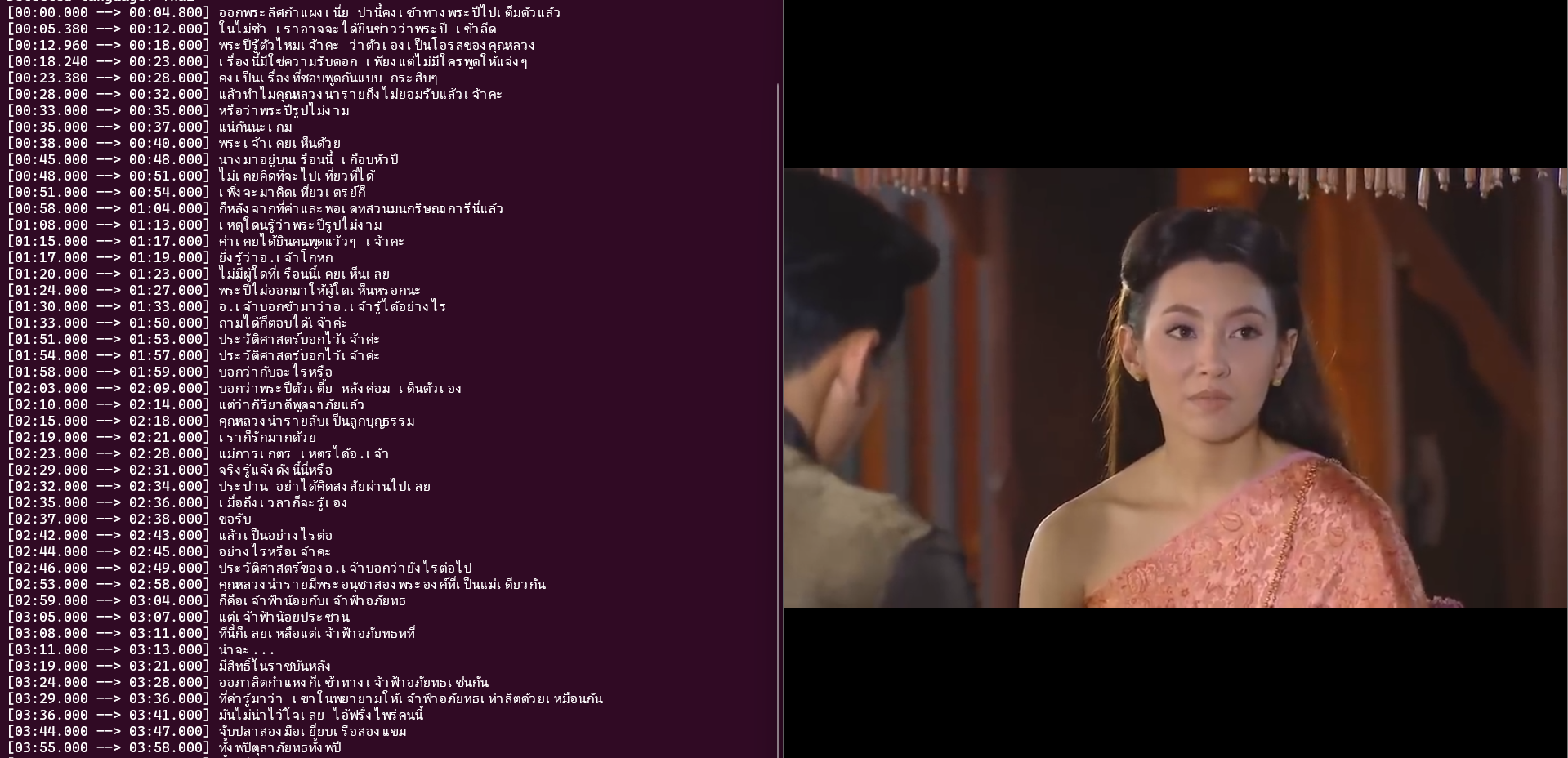

10# Specify language (optional, auto-detected by default)

11whisper audio_file.mp3 --language Thai

Method 2: FFmpeg + Whisper Pipeline

For better control or problematic file formats:

Step 1: Extract Audio with FFmpeg

1# Extract audio as WAV (recommended for Whisper)

2ffmpeg -i input_video.mp4 -vn -acodec pcm_s16le -ar 16000 -ac 1 audio_output.wav

3

4# Extract audio as MP3

5ffmpeg -i input_video.mkv -vn -acodec mp3 -ab 128k audio_output.mp3

6

7# Extract specific time range

8ffmpeg -i input.mp4 -ss 00:01:30 -t 00:02:00 -vn -acodec pcm_s16le audio_segment.wav

Step 2: Transcribe with Whisper

1# Basic transcription

2whisper audio_output.wav

3

4# Generate multiple formats simultaneously

5whisper audio_output.wav --output_format txt,srt,vtt,json

6

7# Use specific model and language

8whisper audio_output.wav --model large --language en --output_format srt

Output Formats

Available Output Formats

- txt: Plain text transcription

- srt: SubRip subtitle format

- vtt: WebVTT subtitle format

- json: Detailed JSON with timestamps and confidence scores

- tsv: Tab-separated values with timestamps

Examples

1# Generate SRT subtitles

2whisper video.mp4 --output_format srt

3

4# Generate multiple formats

5whisper audio.wav --output_format txt,srt,vtt,json

6

7# Custom output directory

8whisper audio.mp3 --output_dir ./transcriptions --output_format srt

Advanced FFmpeg Preprocessing

Audio Quality Optimization

1# Normalize audio levels

2ffmpeg -i input.mp4 -af "dynaudnorm" -acodec pcm_s16le normalized_audio.wav

3

4# Remove background noise (basic)

5ffmpeg -i input.mp4 -af "highpass=f=200,lowpass=f=3000" filtered_audio.wav

6

7# Boost volume

8ffmpeg -i input.mp4 -af "volume=2.0" louder_audio.wav

Handling Multiple Audio Tracks

1# List audio streams

2ffmpeg -i input.mkv

3

4# Extract specific audio track (e.g., track 1)

5ffmpeg -i input.mkv -map 0:a:1 -vn audio_track2.wav

6

7# Mix multiple audio tracks

8ffmpeg -i input.mkv -af "amix=inputs=2" mixed_audio.wav

Batch Processing

1# Process multiple files (bash)

2for file in *.mp4; do

3 ffmpeg -i "$file" -vn -acodec pcm_s16le "${file%.*}.wav"

4 whisper "${file%.*}.wav" --output_format srt

5done

6

7# Windows batch processing

8for %f in (*.mp4) do (

9 ffmpeg -i "%f" -vn -acodec pcm_s16le "%~nf.wav"

10 whisper "%~nf.wav" --output_format srt

11)

Whisper Model Selection

Available Models

| Model | Parameters | English-only | Multilingual | Required VRAM | Relative Speed |

|---|---|---|---|---|---|

| tiny | 39 M | ✓ | ✓ | ~1 GB | ~32x |

| base | 74 M | ✓ | ✓ | ~1 GB | ~16x |

| small | 244 M | ✓ | ✓ | ~2 GB | ~6x |

| medium | 769 M | ✓ | ✓ | ~5 GB | ~2x |

| large | 1550 M | ✗ | ✓ | ~10 GB | 1x |

Model Selection Guidelines

1# Fast transcription (lower accuracy)

2whisper audio.wav --model tiny

3

4# Balanced speed/accuracy

5whisper audio.wav --model base

6

7# High accuracy (slower)

8whisper audio.wav --model large

9

10# English-only models (slightly better for English)

11whisper audio.wav --model base.en

Common Use Cases

1. YouTube Video Transcription

1# Download with yt-dlp, extract audio, transcribe

2yt-dlp -x --audio-format mp3 "https://youtube.com/watch?v=VIDEO_ID"

3whisper "VIDEO_TITLE.mp3" --output_format srt

4

5# Or direct processing if file is compatible

6whisper "$(yt-dlp --get-filename -x --audio-format mp3 'VIDEO_URL')" --output_format srt

2. Podcast Episode Processing

1# Extract and normalize audio

2ffmpeg -i podcast_episode.mp3 -af "dynaudnorm" -ar 16000 normalized_podcast.wav

3

4# Transcribe with timestamps

5whisper normalized_podcast.wav --output_format json,srt --model medium

3. Meeting Recording Transcription

1# Extract audio from meeting recording

2ffmpeg -i meeting_recording.mp4 -vn -ar 16000 -ac 1 meeting_audio.wav

3

4# Transcribe with word-level timestamps

5whisper meeting_audio.wav --output_format json --model base --word_timestamps True

4. Foreign Language Content

1# Auto-detect language

2whisper foreign_audio.mp3 --model medium --output_format srt

3

4# Specify language for better accuracy

5whisper thai_audio.mp3 --language Thai --model medium --output_format srt

6

7# Translate to English while transcribing

8whisper foreign_audio.mp3 --task translate --output_format srt

Optimization Tips

Performance Optimization

1# Use GPU acceleration (if available)

2whisper audio.wav --device cuda

3

4# Specify number of CPU threads

5whisper audio.wav --threads 4

6

7# Use faster models for real-time processing

8whisper audio.wav --model tiny.en --fp16 False

Quality Optimization

1# Improve accuracy with audio preprocessing

2ffmpeg -i noisy_audio.mp3 -af "highpass=f=80,lowpass=f=8000,dynaudnorm" clean_audio.wav

3whisper clean_audio.wav --model large --temperature 0

4

5# Use temperature for consistency

6whisper audio.wav --temperature 0 --best_of 5

Troubleshooting

Common Issues

File Format Problems

1# Convert unsupported formats

2ffmpeg -i input.webm -acodec pcm_s16le -ar 16000 output.wav

3ffmpeg -i input.flac -acodec mp3 output.mp3

Memory Issues

1# Use smaller model

2whisper large_file.wav --model tiny

3

4# Process in segments

5ffmpeg -i large_file.mp4 -f segment -segment_time 300 -vn segment_%03d.wav

Poor Transcription Quality

1# Try different models

2whisper audio.wav --model medium # vs base or large

3

4# Specify language explicitly

5whisper audio.wav --language en

6

7# Adjust audio quality

8ffmpeg -i input.mp3 -af "volume=2.0,highpass=f=100" enhanced.wav

Advanced Features

Custom Vocabulary and Prompts

1# Use initial prompt for context

2whisper audio.wav --initial_prompt "This is a technical discussion about machine learning"

3

4# For names and technical terms

5whisper audio.wav --initial_prompt "Speakers: Peerasan Buranasanti. Topic: Media Streaming"

Fine-tuning Output

1# Suppress non-speech tokens

2whisper audio.wav --suppress_tokens "50257"

3

4# No speech threshold (adjust for silence detection)

5whisper audio.wav --no_speech_threshold 0.6

6

7# Compression ratio threshold

8whisper audio.wav --compression_ratio_threshold 2.4

Integration with Video Editing

1# Generate subtitle file for video editing

2whisper video.mp4 --output_format srt --model medium

3

4# Burn subtitles directly into video

5whisper video.mp4 --output_format srt

6ffmpeg -i video.mp4 -vf "subtitles=th-video.srt" video_with_subs.mp4

Complete Example Workflows

Workflow 1: Conference Talk Processing

1#!/bin/bash

2# conference_transcription.sh

3

4INPUT_FILE="$1"

5OUTPUT_NAME="${INPUT_FILE%.*}"

6

7echo "Processing: $INPUT_FILE"

8

9# Step 1: Extract and optimize audio

10ffmpeg -i "$INPUT_FILE" \

11 -vn \

12 -af "dynaudnorm,highpass=f=80,lowpass=f=8000" \

13 -ar 16000 \

14 -ac 1 \

15 "${OUTPUT_NAME}_processed.wav"

16

17# Step 2: Transcribe with Whisper

18whisper "${OUTPUT_NAME}_processed.wav" \

19 --model medium \

20 --output_format txt,srt,json \

21 --language Thai \

22 --initial_prompt "This is a technical conference presentation"

23

24# Step 3: Clean up

25rm "${OUTPUT_NAME}_processed.wav"

26

27echo "Transcription complete: ${OUTPUT_NAME}.txt, ${OUTPUT_NAME}.srt"

Workflow 2: Multilingual Content

1#!/bin/bash

2# multilingual_transcription.sh

3

4INPUT_FILE="$1"

5

6# Extract audio

7ffmpeg -i "$INPUT_FILE" -vn -ar 16000 temp_audio.wav

8

9# Detect language and transcribe

10whisper temp_audio.wav --model medium --output_format json

11

12# Also create English translation

13whisper temp_audio.wav --task translate --output_format srt --model medium

14

15# Clean up

16rm temp_audio.wav

Best Practices

Audio Quality Guidelines

- Sample Rate: 16kHz is optimal for Whisper

- Format: WAV or high-quality MP3

- Mono vs Stereo: Mono is sufficient for speech

- Bit Depth: 16-bit is adequate

Model Selection Strategy

- tiny/base: Quick drafts, real-time processing

- small/medium: Balanced accuracy/speed for most use cases

- large: Maximum accuracy for important content

File Organization

1project/

2├── original_files/

3├── processed_audio/

4├── transcriptions/

5│ ├── txt/

6│ ├── srt/

7│ └── json/

8└── scripts/

Useful FFmpeg Commands for Whisper Prep

Audio Extraction and Conversion

1# Extract best quality audio

2ffmpeg -i input.mkv -q:a 0 -map a output.mp3

3

4# Convert to Whisper-optimal format

5ffmpeg -i input.mp4 -vn -ar 16000 -ac 1 -c:a pcm_s16le output.wav

6

7# Extract audio from specific timeframe

8ffmpeg -i input.mp4 -ss 00:10:00 -to 00:20:00 -vn output.wav

Audio Enhancement

1# Normalize and filter

2ffmpeg -i input.mp3 -af "dynaudnorm=f=75:g=25,highpass=f=80,lowpass=f=8000" clean.wav

3

4# Reduce noise (basic)

5ffmpeg -i noisy.wav -af "afftdn" denoised.wav

Performance Monitoring

Check Processing Time

1# Time the transcription

2time whisper audio.wav --model base

3

4# Monitor GPU usage (if using CUDA)

5nvidia-smi

Batch Processing with Progress

1#!/bin/bash

2total_files=$(ls *.mp4 | wc -l)

3current=0

4

5for file in *.mp4; do

6 ((current++))

7 echo "Processing file $current of $total_files: $file"

8

9 ffmpeg -i "$file" -vn -ar 16000 temp.wav

10 whisper temp.wav --output_format srt --model base

11 rm temp.wav

12

13 echo "Completed: ${file%.*}.srt"

14done

Integration Tips

With Video Editors

- Export SRT files for Premiere Pro, DaVinci Resolve, etc.

- Use VTT format for web players

- JSON format provides detailed timing for custom applications

With Automation Scripts

1# Python automation example

2import subprocess

3import os

4

5def process_video(input_path, model="base"):

6 # Extract audio

7 audio_path = f"{os.path.splitext(input_path)[0]}.wav"

8 subprocess.run([

9 "ffmpeg", "-i", input_path, "-vn",

10 "-ar", "16000", "-ac", "1", audio_path

11 ])

12

13 # Transcribe

14 subprocess.run([

15 "whisper", audio_path, "--model", model,

16 "--output_format", "srt,txt"

17 ])

18

19 # Clean up

20 os.remove(audio_path)

Troubleshooting

Common Issues and Solutions

| Issue | Solution |

|---|---|

| "No module named whisper" | pip install openai-whisper |

| FFmpeg not found | Add FFmpeg to system PATH |

| Poor transcription quality | Try larger model, improve audio quality |

| Out of memory | Use smaller model or process shorter segments |

| Wrong language detected | Specify --language parameter |

| No timestamps in output | Use SRT, VTT, or JSON format |

Audio Quality Issues

1# Check audio properties

2ffprobe -v quiet -show_format -show_streams input.mp4

3

4# Test with sample

5ffmpeg -i input.mp4 -t 30 -vn sample.wav

6whisper sample.wav --model base

Quick Reference Commands

Essential Commands

1# Basic transcription

2whisper file.mp4

3

4# High-quality subtitles

5whisper file.mp4 --model large --output_format srt

6

7# Extract audio + transcribe

8ffmpeg -i video.mp4 -vn audio.wav && whisper audio.wav --output_format srt

9

10# Batch process current directory

11for f in *.mp4; do whisper "$f" --output_format srt; done

12

13# Foreign language with translation

14whisper foreign.mp3 --task translate --output_format srt

Useful FFmpeg Audio Processing

1# Optimize for speech recognition

2ffmpeg -i input.mp4 -af "dynaudnorm,highpass=f=80,lowpass=f=8000" -ar 16000 -ac 1 optimized.wav

3

4# Split long audio into segments

5ffmpeg -i long_audio.mp3 -f segment -segment_time 600 -c copy segment_%03d.mp3

6

7# Merge multiple audio files

8ffmpeg -f concat -safe 0 -i filelist.txt -c copy merged.mp3

Note: Processing time varies significantly based on audio length, model size, and hardware. The large model provides the best accuracy but requires substantial computational resources.